Table Of Contents

- iSCSI: The Quick History Lesson

- What is NVMe?

- The Fundamental Difference of Spinning Disks and NVMe

- NVMe over Fabrics: Flash Storage on the Network

- NVMe over TCP vs iSCSI: The Comparison

- The Compelling Case for NVMe over TCP

- Choosing Between NVMe over TCP and iSCSI

- Simplyblock: Embracing Versatility

- The Future of NVMe over TCP

- Questions and Answers

TLDR: In a direct comparison of NVMe over TCP vs iSCSI, we see that NVMe over TCP outranks iSCSI in all categories with IOPS improvements of up to 50% (and more) and latency improvements by up to 34%.

When data grows, storage needs to grow, too. That’s when remotely attached SAN (Storage Area Network) systems come in. So far, these were commonly connected through one of three protocols: Fibre Channel, Infiniband, or iSCSI. However, with the latter being on the “low end” side of things, without the need for special hardware to operate. NMVe over Fabrics (NVMe-oF), and specifically NVMe over TCP (NVMe/TCP) as the successor of iSCSI, is on the rise to replace these legacy protocols and bring immediate improvements in latency, throughput, and IOPS.

iSCSI: The Quick History Lesson

iSCSI is a protocol that connects remote storage solutions (commonly hardware storage appliances) to storage clients. The latter are typically servers without (or with minimal) local storage, as well as virtual machines. In recent years, we have also seen iSCSI being used as a backend for container storage.

iSCSI stands for Internet Small Computer Storage Interface and encapsulates the standard SCSI commands within TCP/IP packets. That means that iSCSI works over commodity Ethernet networks, removing the need for specialized hardware such as network cards (NICs) and switches.

The iSCSI standard was first released in early 2000. A world that was very different from today. Do you remember what a phone looked like in 2000?

That said, while there was access to the first flash-based systems, prices were still outrageous, and storage systems were designed with spinning disks in mind. Remember that. We’ll come back to it later.

What is SCSI?

SCSI, or, you guessed it, the Small Computer Storage Interface, is a set of standards for connecting and transferring data between computers and peripheral devices. Originally developed in the 1980s, SCSI has been a foundational technology for data storage interfaces, supporting various device types, primarily hard drives, optical drives, and scanners.

While SCSI kept improving and adding new commands for technologies like NVMe. The foundation is still rooted in the early 1980s, though. However, many standards still use the SCSI command set, SATA (home computers), SAS (servers), and iSCSI.

What is NVMe?

Non-Volatile Memory Express (NVMe) is a modern PCI Express-based (PCI-e) storage interface. With the original specification dating back to 2011, NVMe is engineered specifically for solid-state drives (SSDs) connected via the PCIe bus. Therefore, NVMe devices are directly connected to the CPU and other devices on the bus to increase throughput and latency. NVMe dramatically reduces latency and increases input/output operations per second (IOPS) compared to traditional storage interfaces.

As part of the NVMe standard, additional specifications are developed, such as the transport specification which defines how NVMe commands are transported (e.g., via the PCI Express Bus, but also networking protocols like TCP/IP).

The Fundamental Difference of Spinning Disks and NVMe

Traditional spinning hard disk drives (HDDs) rely on physical spinning platters and moveable read/write heads to write or access data. When data is requested, the mechanical component must be physically placed in the correct location of the platter stack, resulting in significant access latencies ranging from 10-14 milliseconds.

Flash storage, including NVMe devices, eliminates the mechanical parts, utilizing NAND flash chips instead. NAND stores data purely electronically and achieves access latencies as low as 20 microseconds (and even lower on super high-end gear). That makes them 100 times faster than their HDD counterparts.

For a long time, flash storage had the massive disadvantage of limited storage capacity. However, this disadvantage slowly fades away with companies introducing higher-capacity devices. For example, Toshiba just announced a 180TB flash storage device.

Cost, the second significant disadvantage, also keeps falling with improvements in development and production. Technologies like QLC NAND offer incredible storage density for an affordable price.

Anyhow, why am I bringing up the mechanical vs electrical storage principle? The reason is simple: the access latency. SCSI and iSCSI were never designed for super low access latency devices because they didn’t really exist at the time of their development. And, while some adjustments were made to the protocol over the years, their fundamental design is outdated and can’t be changed for backward compatibility reasons.

NVMe over Fabrics: Flash Storage on the Network

NVMe over Fabrics (also known as NVMe-oF) is an extension to the NVMe base specification. It allows NVMe storage to be accessed over a network fabric while maintaining the low-latency, high-performance characteristics of local NVMe devices.

NVMe over Fabrics itself is a collection of multiple sub-specifications, defining multiple transport layer protocols.

- NVMe over TCP: NVMe/TCP utilizes the common internet standard protocol TCP/IP. It deploys on commodity Ethernet networks and can run parallel to existing network traffic. That makes NVMe over TCP the modern successor to iSCSI, taking over where iSCSI left off. Therefore, NVMe over TCP is the perfect solution for public cloud-based storage solutions that typically only provide TCP/IP networking.

- NVME over Fibre Channel: NVMe/FC builds upon the existing Fibre Channel network fabric. It tunnels NVMe commands through Fibre Channel packets and enables reusing available Fibre Channel hardware. I wouldn’t recommend it for new deployments due to the high entry cost of Fibre Channel equipment.

- NVMe over Infiniband: Like NVMe over Fibre Channel, NVMe/IB utilizes existing Infiniband networks to tunnel the NVMe protocol. If you have existing Infiniband equipment, NVMe over Infiniband might be your way. For new deployments, the initial entry cost is too high.

- NVME over RoCE: NVME over Converged Ethernet is a transport layer that uses an Ethernet fabric for remote direct memory access (RDMA). To use NVMe over RoCE, you need RDMA-capable NICs. RoCE comes in two versions: RoCEv1, which is a layer-2 protocol and not routable, and RoCEv2, which uses UDP/IP and can be routed across complex networks. NVMe over RoCE doesn’t scale as easily as NVMe over TCP but provides even lower latencies.

NVMe over TCP vs iSCSI: The Comparison

When comparing NVMe over TCP vs iSCSI, we see considerable improvements in all three primary metrics: latency, throughput, and IOPS.

The folks over at Blockbridge ran an extensive comparison of the two technologies, which shows that NVMe over TCP outperformed iSCSI, regardless of the benchmark.

I’ll provide the most critical benchmarks here, but I recommend you read through the full benchmark article right after finishing here.

Anyhow, let’s dive a little deeper into the actual facts on the NVMe over TCP vs iSCSI benchmark.

Editor’s Note: Our Developer Advocate, Chris Engelbert, gave a talk recently at SREcon in Dublin, talking about the performance between NVMe over TCP and iSCSI, which led to this blog post. Find the full presentation NVMe/TCP makes iSCSI look like Fortran.

Benchmarking Network Storage

Evaluating storage performance involves comparing four major performance indicators.

- IOPS: Number of input/output operations processed per second

- Latency: Time required to complete a single input/output operation

- Throughput: Total data transferred per unit of time

- Protocol Overhead: Additional processing required by the communication protocol

Editor’s note: For latency, throughput, and IOPS, we have an exhaustive blog post talking deeper about the necessities, their relationships, and how to calculate them.

A comprehensive performance testing involves simulated workloads that mirror real-world scenarios. To simplify this process, benchmarks use tools like FIO (Flexible I/O Tester) to generate consistent, reproducible test data and results across different storage configurations and systems.

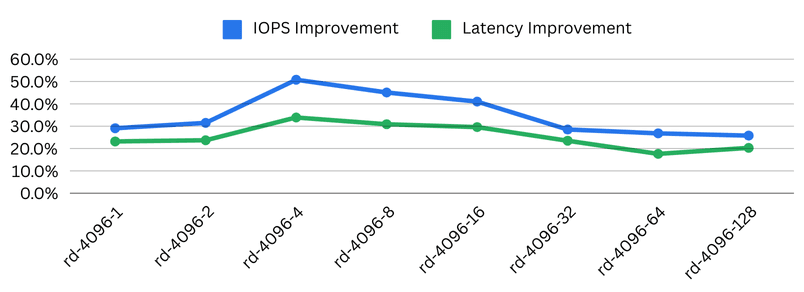

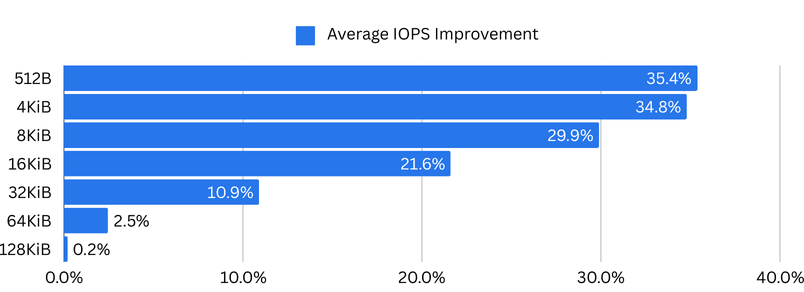

IOPS Improvements of NVMe over TCP vs iSCSI

Running IOPS-intensive applications, the number of available IOPS in a storage system is critical. IOPS-intensive application means systems such as databases, analytics platforms, asset servers, and similar solutions.

Improving IOPS by exchanging the storage-network protocol is an immediate win for the database and us.

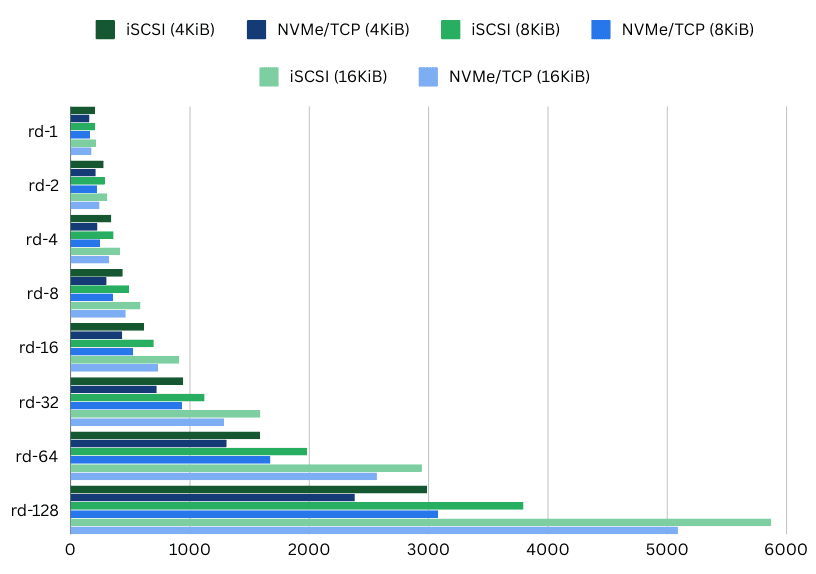

Using NVMe over TCP instead of iSCSI shows a dramatic increase in IOPS, especially for smaller block sizes. At 512 bytes block size, Blockbridge found an average 35.4% increase in IOPS. At a more common 4KiB block size, the average increase was 34.8%.

That means the same hardware can provide over one-third more IOPS using NVMe over TCP vs iSCSI at no additional cost.

Latency Improvements of NVMe over TCP vs iSCSI

While IOPS-hungry use cases, such as compaction events in databases (Cassandra), benefit from the immense increase in IOPS, latency-sensitive applications love low access latencies. Latency is the primary factor that causes people to choose local NVMe storage over remotely-attached storage, knowing about many or all drawbacks. If you’re looking to optimize latency-sensitive workloads, explore how local SSD caching can further reduce access latency in cloud environments.

Latency-sensitive applications range from high-frequency trading systems, where milliseconds are measured in hard money, over telecommunication systems, where latency can introduce issues with system synchronization, to cybersecurity and threat detection solutions that need to react as fast as possible.

Therefore, decreasing latency is a significant benefit for many industries and solutions. Apart from that, a lower access latency always speeds up data access, even if your system isn’t necessarily latency-sensitive. You will feel the difference.

Blockbridge found the most significant benefit in access latency reduction with a block size of 16KiB with a queue depth of 128 (which can easily be hit with I/O demanding solutions). The average latency for iSCSI was 5,871μs compared to NVMe over TCP with 5,089μs. A 782μs (~25%) decrease in access latency—just by exchanging the storage protocol.

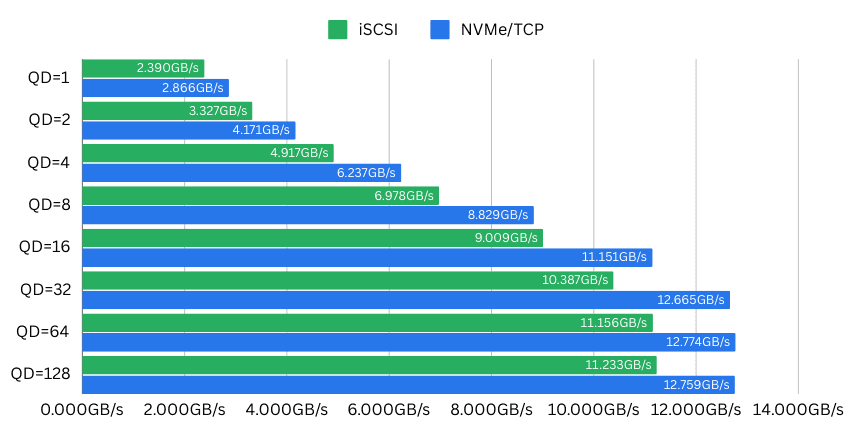

Throughput Improvement of NVMe over TCP vs iSCSI

As the third primary metric of storage performance, throughput describes how much data is actually pumped from the disk into your workload.

Throughput is the major factor for applications such as video encoding or streaming platforms, large analytical systems, and game servers streaming massive worlds into memory. Furthermore, there are also time-series storage, data lakes, and historian databases.

Throughput-heavy systems benefit from higher throughput to get the “job done faster.” Oftentimes, increasing the throughput isn’t easy. You’re either bound by the throughput provided by the disk or, in the case of a network-attached system, the network bandwidth. To achieve high throughput and capacity, remote network storage utilizes high bandwidth networking or specialized networking systems such as Fibre Channel or Infiniband.

Blockbridge ran their tests on a dual-port 100Gbit/s network card, limited by the PCI Express x16 Gen3 bus to a maximum throughput of around 126Gbit/s. Newer PCIe standards achieve much higher throughput. Hence, NVMe devices and NICs aren’t bound by the “limiting” factor of the PCIe bus anymore.

With a 16KiB block size and a queue depth of 32, their benchmark saw a whopping 2.3GB/s increase in performance on NVMe over TCP vs iSCSI. The throughput increased from 10.387GBit/s on iSCSI to 12.665GBit/s, an easy 20% on top—again, using the same hardware. That’s how you save money.

The Compelling Case for NVMe over TCP

We’ve seen that NVMe over TCP has significant performance advantages over iSCSI in all three primary storage performance metrics. Nevertheless, there are more advantages to NVMe over TCP vs iSCSI.

- Standard Ethernet: NVMe over TCP’s most significant advantage is its ability to operate over standard Ethernet networks. Unlike specialized networking technologies (Infiniband, Fibre Channel), NVMe/TCP requires no additional hardware investments or complex configuration, making it remarkably accessible for organizations of all sizes.

- Performance Characteristics: NVMe over TCP delivers exceptional performance by minimizing protocol overhead and leveraging the efficiency of NVMe’s design. It can achieve latencies comparable to local storage while providing the flexibility of network-attached resources. Modern implementations can sustain throughput rates exceeding traditional storage protocols by significant margins.

- Ease of Deployment: NVMe over TCP integrates seamlessly with Linux and Windows (Server 2025 and later) since the necessary drivers are already part of the kernel. That makes NVMe/TCP straightforward to implement and manage. Seamless compatibility reduces the learning curve and integration challenges typically associated with new storage technologies.

Choosing Between NVMe over TCP and iSCSI

Deciding between two technologies isn’t always easy. It isn’t that hard in the case of NVMe over TCP vs iSCSI. The use cases for new iSCSI deployment are very sparse. From my perspective, the only valid use case is the integration of pre-existing legacy systems that don’t yet support NVMe over TCP

That’s why simplyblock, as an NVMe over TCP first solution, still provides iSCSI if you really need it. We offer it exactly for the reason that migrations don’t happen from today to tomorrow. Still, you want to leverage the benefits of newer technologies, such as NVMe over TCP, wherever possible. With simplyblock, logical volumes can easily be provisioned as NVMe over TCP or iSCSI devices. You can even switch over from iSCSI to NVMe over TCP later on.

In any case, you should go with NVMe over TCP when:

- You operate high-performance computing environments

- You have modern data centers with significant bandwidth

- You deploy workloads requiring low-latency, high IOPS, or throughput storage access

- You find yourself in scenarios that demand scalable, flexible storage solutions

- You are in any other situation where you need remotely attached storage

You should stay on iSCSI (or slowly migrate away) when:

- You have legacy infrastructure with limited upgrade paths

You see, there aren’t a lot of reasons. Given that, it’s just a matter of selecting your new storage solution. Personally, these days, I would always recommend software-defined storage solutions such as simplyblock, but I’m biased. Anyhow, an SDS provides the best of both worlds: commodity storage hardware (with the option to go all in with your 96-bay storage server) and performance.

Simplyblock: Embracing Versatility

Simplyblock demonstrates forward-thinking storage design by supporting both NVMe over TCP and iSCSI, providing customers with the best performance when available and the chance to migrate slowly in the case of existing legacy clients.

Furthermore, simplyblock offers features known from traditional SAN storage systems or “filesystems” such as ZFS. This includes a full copy-on-write backend with instant snapshots and clones. It includes synchronous and asynchronous replication between storage clusters. Finally, simplyblock is your modern storage solution, providing storage to dedicated hosts, virtual machines, and containers. Regardless of the client, simplyblock offers the most seamless integration with your existing and upcoming environments.

The Future of NVMe over TCP

As enterprise and cloud computing continue to evolve, NVMe over TCP stands as the technology of choice for remotely attached storage. Firstly, it combines simplicity, performance, and broad compatibility. Secondly, it provides a cost-efficient and scalable solution utilizing commodity network gear.

The protocol’s ongoing development (last specification update May 2024) and increasing adoption show continued improvements in efficiency, reduced latency, and enhanced scalability.

NVMe over TCP represents a significant step forward in storage networking technology. Furthermore, combining the raw performance of NVMe with the ubiquity of Ethernet networking offers a compelling solution for modern computing environments. While iSCSI remains relevant for specific use cases and during migration phases, NVME over TCP represents the future and should be adopted as soon as possible.

We, at simplyblock, are happy to be part of this important step in the history of storage.

Questions and Answers

Yes, NVMe over TCP is superior to iSCSI in almost any way. NVMe over TCP provides lower protocol overhead, better throughput, lower latency, and higher IOPS compared to iSCSI. It is recommended that iSCSI not be used for newly designed infrastructures and that old infrastructures be migrated wherever possible.

NVMe over TCP is superior in all primary storage metrics, meaning IOPS, latency, and throughput. NVMe over TCP shows up to 35% higher IOPS, 25% lower latency, and 20% increased throughput compared to iSCSI using the same network fabric and storage.

NVMe/TCP is a storage networking protocol that utilizes the common internet standard protocol TCP/IP as its transport layer. It is deployed through standard Ethernet fabrics and can be run parallel to existing network traffic, while separation through VLANs or physically separated networks is recommended. NVMe over TCP is considered the successor of the iSCSI protocol.

iSCSI is a storage networking protocol that utilizes the common internet standard protocol TCP/IP as its transport layer. It connects remote storage solutions (commonly hardware storage appliances) to storage clients through a standard Ethernet fabric. iSCSI was initially standardized in 2000. Many companies replace iSCSI with the superior NVMe over TCP protocol.

SCSI (Small Computer Storage Interface) is a command set that connects computers and peripheral devices and transfers data between them. Initially developed in the 1980s, SCSI has been a foundational technology for data storage interfaces, supporting various device types such as hard drives, optical drives, and scanners.

NVMe (Non-Volatile Memory Express) is a specification that defines the connection and transmission of data between storage devices and computers. The initial specification was released in 2011. NVMe is designed specifically for solid-state drives (SSDs) connected via the PCIe bus. NVMe devices have improved latency and performance than older standards such as SCSI, SATA, and SAS.