Table Of Contents

- The Latency-IOPS-QPS-TPS Connection

- Impact on Database Performance

- The Hidden Cost of Latency

- The IOPS Dance

- Real-World Database Performance Implications

- The Ripple Effect

- Breaking Free from Storage Constraints

- New Architectural Possibilities

- How does the future of database performance optimization look like?

- FAQ

TLDR: Storage and storage limitations have a fundamental impact on database performance, with access latency creating a hard physical limitation on IOPS, queries per second (QPS), and transactions per second (TPS).

With the rise of the cloud-native world of microservices, event-driven architectures, and distributed systems, understanding storage physics has never been more critical. As organizations deploy hundreds of database instances across their infrastructure, the multiplicative impact of storage performance becomes a defining factor in system behavior and database performance metrics, such as queries per second (QPS) and transactions per second (TPS).

While developers obsess over query optimization and index tuning, a more fundamental constraint silently shapes every database operation: the raw physical limits of storage access.

These limits aren’t just academic concerns—they’re affecting your systems right now. Each microservice has its own database, each Kubernetes StatefulSet, and every cloud-native application wrestles with physical boundaries, often without realizing it. When your system spans multiple availability zones, involves event sourcing, or requires real-time data processing, storage physics becomes the hidden multiplier that can either enable or cripple your entire architecture.

In this deep dive, we’ll explain how storage latency and IOPS create performance ceilings that no amount of application-level optimization can break through. More importantly, we’ll explore how understanding these physical boundaries is crucial for building truly high-performance, cloud-native systems that can scale reliably and cost-effectively.

The Latency-IOPS-QPS-TPS Connection

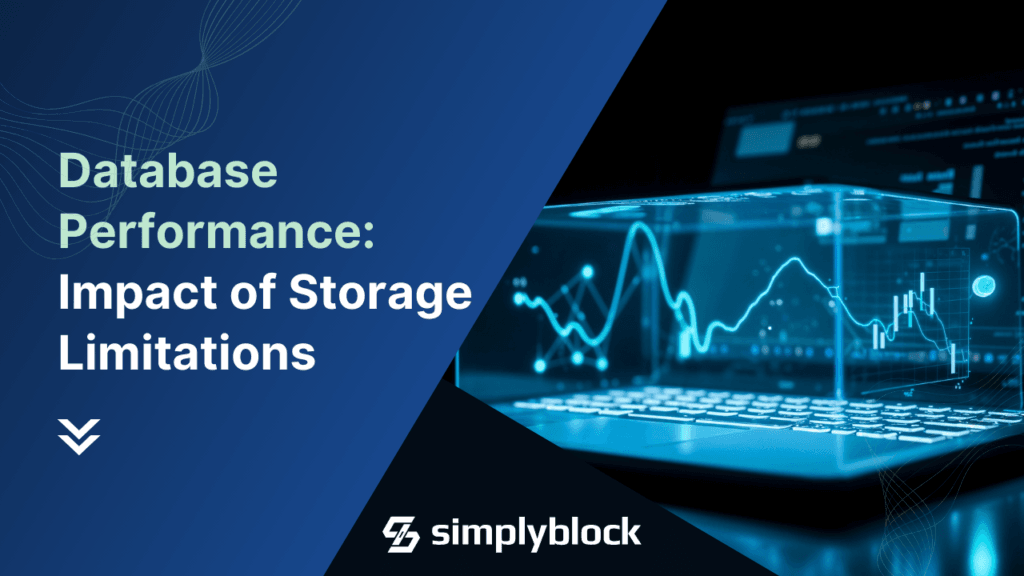

When we look at database and storage performance, there are four essential metrics to understand.

Latency (or access latency) measures how long it takes to complete a single I/O operation from issuing to answering. On the other hand, IOPS (Input/Output Operations Per Second) represents how many operations can be performed per second. Hence, IOPS measures the raw storage throughput for read/write operations.

On the database side, QPS (Queries Per Second) represents the number of query operations that can be executed per second, basically the higher-level application throughput. Last, TPS (Transactions Per Second) defines how many actual database transactions can be executed per second. A single transaction may contain one or more queries.

These metrics have key dependencies:

- Each query typically requires multiple I/O operations.

- As IOPS increases, latency increases due to queuing and resource contention.

- Higher latency constraints maximum achievable IOPS and QPS.

- The ratio between QPS and IOPS varies based on query complexity and access patterns.

- TPS is the higher-level metric of QPS. Both are directly related.

Consider a simple example:

If your storage system has a latency of 1 millisecond per I/O operation, the theoretical maximum IOPS would be 1,000 (assuming perfect conditions). However, increase that latency to 10 milliseconds, and your maximum theoretical IOPS drops to 100. Suppose each query requires an average of 2 I/O operations. In that case, your maximum QPS would be 500 at 1 ms latency but only 50 at 10 ms latency – demonstrating how latency impacts both IOPS and QPS in a cascading fashion.

1 second = 1000ms

1 I/O operation = 10ms

IOPS = 1000 / 10 = 100

1 query = 2 I/O ops

QPS = 100 / 2 = 50

The above is a simplified example. Modern storage devices have parallelism built into them, running multiple I/O operations simultaneously. However, you need a storage engine to make them available, and they only delay the inevitable.

Impact on Database Performance

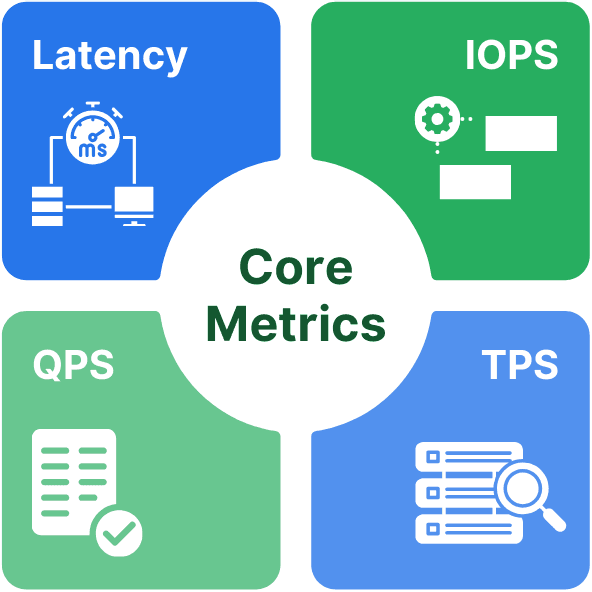

For database workloads, the relationship between latency and IOPS becomes even more critical. Here’s why:

- Query Processing Speed: Lower latency means faster individual query execution for data read from storage devices.

- Concurrent Operations: Higher IOPS enables more simultaneous database operations.

- Transaction Processing: The combination affects how many transactions per second (TPS) your database can handle.

The Hidden Cost of Latency

Storage latency impacts database operations in subtle but profound ways. Consider a typical PostgreSQL instance running on AWS EBS gp3 storage, which averages 2-4ms latency for read-write operations. While this might seem negligible, let’s break down its real impact:

Transaction Example:

- Single read operation: 3ms

- Write to WAL: 3ms

- Page write: 3ms

- fsync(): 3ms

Total latency: 12ms minimum per transaction

Maximum theoretical transactions per second: ~83

This means even before considering CPU time, memory access, or network latency, storage alone limits your database to fewer than 100 truly consistent transactions per second. Many teams don’t realize they’re hitting this physical limit until they’ve spent weeks optimizing application code with diminishing returns.

The IOPS Dance

IOPS limitations create another subtle challenge. Traditional cloud block storage solutions like Amazon EBS often struggle to simultaneously deliver low latency and high IOPS. This limitation can force organizations to over-provision storage resources, leading to unnecessary costs. For example, when running databases on AWS, many organizations provision multiple high-performance EBS volumes to achieve their required IOPS targets. However, this approach significantly underutilizes storage capacity while still not achieving optimal latency.

A typical gp3 volume provides a baseline of 3,000 IOPS. Let’s see how this plays out in real scenarios:

Common Database Operations IOPS Cost:

- Index scan: 2-5 IOPS per page

- Sequential scan: 1 IOPS per page

- Write operation: 2-4 IOPS (data + WAL)

- Vacuum operation: 10-20 IOPS per second

With just 20 concurrent users performing moderate-complexity queries, you could easily exceed your IOPS budget without realizing it. The database doesn’t stop – it just starts queueing requests, creating a cascading effect of increasing latency.

Real-World Database Performance Implications

Here’s a scenario many teams encounter:

A database server handling 1,000 transactions per minute seems to be performing well, with CPU usage at 40% and plenty of available memory. Yet response times occasionally spike inexplicably. The hidden culprit? Storage queuing:

Storage Queue Analysis:

- Average queue depth: 4

- Peak queue depth: 32

- Additional latency per queued operation: 1ms

- Effective latency during peaks: 35ms

Impact:

- 3x increase in transaction time

- Timeout errors in the application layer

- Connection pool exhaustion

The Ripple Effect

Storage performance limitations create unexpected ripple effects throughout the database system:

Connection Pool Behavior

When storage latency increases, transactions take longer to complete. This leads to connection pool exhaustion, not because of too many users, but because each connection holds onto resources longer than necessary.

Buffer Cache Efficiency

Higher storage latency makes buffer cache misses more expensive. This can cause databases to maintain larger buffer caches than necessary, consuming memory that could be better used elsewhere.

Query Planner Decisions

Most query planners don’t factor in current storage performance when making decisions. A plan that’s optimal under normal conditions might become significantly suboptimal during storage congestion periods.

Breaking Free from Storage Constraints

Modern storage solutions, such as simplyblock, are transforming this landscape. NVMe storage offers sub-200μs latency and millions of IOPS. Hence, databases operate closer to their theoretical limits:

Same Transaction on NVMe:

- Single read operation: 0.2ms

- Write to WAL: 0.2ms

- Page write: 0.2ms

- fsync(): 0.2ms

Total latency: 0.8ms

Theoretical transactions per second: ~1,250

This 15x improvement in theoretical throughput isn’t just about speed – it fundamentally changes how databases can be architected and operated.

New Architectural Possibilities

Understanding these storage physics opens new possibilities for database architecture:

Rethinking Write-Ahead Logging

With sub-millisecond storage latency, the traditional WAL design might be unnecessarily conservative. Some databases are exploring new durability models that take advantage of faster storage.

Dynamic Resource Management

Modern storage orchestrators can provide insights into actual storage performance, enabling databases to adapt their behavior based on current conditions rather than static assumptions.

Query Planning Evolution

Next-generation query planners could incorporate real-time storage performance metrics, making decisions that optimize for current system conditions rather than theoretical models.

How does the future of database performance optimization look like?

Understanding storage physics fundamentally changes how we approach database optimization and architecture. While traditional focus areas like query optimization and indexing remain essential, the emergence of next-generation storage solutions enables paradigm shifts in database design and operation. Modern storage architectures that deliver consistent sub-200μs latency and high IOPS aren’t just incrementally faster – they unlock entirely new possibilities for database architecture:

- True Horizontal Scalability: With storage no longer being the bottleneck, databases can scale more effectively across distributed systems while maintaining consistent performance.

- Predictable Performance: By eliminating storage queuing and latency variation, databases can deliver more consistent response times, even under heavy load.

- Simplified Operations: When storage is no longer a constraint, many traditional database optimization techniques and workarounds become unnecessary, reducing operational complexity.

For example, simplyblock’s NVMe-first architecture delivers consistent sub-200μs latency while maintaining enterprise-grade durability through distributed erasure coding. This enables databases to operate much closer to their theoretical performance limits while reducing complexity and cost through intelligent storage optimization.

As more organizations recognize that storage physics ultimately governs database behavior, we’ll likely see continued innovation in storage architectures and database designs that leverage these capabilities. The future of database performance isn’t just about faster storage – it’s about fundamentally rethinking how databases interact with their storage layer to deliver better performance, reliability, and cost-effectiveness at scale.

FAQ

Queries per second (QPS) in a database context measures how many read or write operations (queries) a database can handle per second.

Transactions per second (TPS) in a database context measures the number of complete, durable operations (involving one or more queries) successfully processed and committed to storage per second.

Improving database performance involves optimizing query execution, indexing data effectively, scaling hardware resources, and fine-tuning storage configurations to reduce latency and maximize throughput.

Database performance refers to how efficiently a database processes queries and transactions, delivering fast response times, high throughput, and optimal resource utilization. Many factors, such as query complexity, data model, underlying storage performance, and more, influence database performance.

Storage directly influences database performance. Factors like read/write speed, latency, IOPS capacity, and storage architecture (e.g., SSDs vs. HDDs) directly impact database throughput and query execution times.