Table Of Contents

- Simplyblock: Enterprise Storage Platform for Kubernetes

- Portworx: Kubernetes Storage and Data Management

- Ceph: Open-source, Distributed Storage System

- Longhorn: Cloud-native Block Storage for Kubernetes

- NFS: File Sharing Solution for Enterprises with Heterogeneous Environments

- Make Your Kubernetes Storage Choice in 2025

- Questions and Answers

Selecting your Kubernetes persistent storage may be tough due to the many available options. Not all are geared towards enterprise setups, though. Hence, we would like to briefly introduce 5 storage solutions for Kubernetes that may meet your enterprise’s diverse storage needs.

That said, as Kubernetes adoption keeps growing in 2025, selecting the right storage solution is more important than ever. Enterprise-level features, data encryption, and high availability are at the forefront of the requirements we want to look into. The same is true for the ability to attach to multiple clients simultaneously and the built-in data loss protection.

Simplyblock: Enterprise Storage Platform for Kubernetes

Simplyblock™ is an enterprise-grade storage platform designed to cater to high-performance and scalability needs for storage in Kubernetes environments. Simplyblock is fully optimized to take advantage of modern NVMe devices. It utilizes the NVMe/TCP protocol to share its shared volumes between the storage cluster and clients, providing superior throughput and lower access latency than the alternatives.

Simplyblock is designed as a cloud-native solution and is highly integrated with Kubernetes through its Simplyblock CSI driver. It supports dynamic provisioning, snapshots, clones, volume resizing, fully integrated encryption at rest, and many more. One benefit of simplyblock is its use of NVMe over TCP which is integrated into the Linux and Windows (Server 2025 or later) kernels, meaning no additional drivers. This also means it is easy to use Simplyblock volumes outside of Kubernetes, including on Bare-Metal Kubernetes, if you also operate virtual machines and would like to unify your storage. Furthermore, simplyblock volumes support read-write multi-attach. That means they can be attached to multiple pods, VMs, or both at the same time, making it easy to share data.

Its scale-out architecture provides full multi-tenant isolation, meaning that many customers can share the same storage backend. Logical volumes can be encrypted at rest either by an encryption key per tenant or even per logical volume, providing the strongest isolation option.

Deployment-wise, simplyblock offers the best of both worlds: disaggregated and hyperconverged setups. Simplyblock’s storage engine can be deployed on either a set of separate storage nodes, building a disaggregated storage cluster, or on Kubernetes worker nodes to utilize the worker node-local storage. Simplyblock also supports a mixed setup, where workloads can benefit from ultra-low latency with worker node-local storage (node-affinity) and the security and “spill-over” of the infinite storage from the disaggregated cluster.

As the only solution presented here, simplyblock favors erasure coding over replication for high availability and fault tolerance. Erasure coding is quite similar to RAID and uses parity information to achieve data loss protection. Simplyblock distributes this parity information across cluster nodes for higher fault tolerance. That said, erasure coding has a configuration similar to a replication factor, defining how many chunks and parity information will be used per calculation. This enables the best trade-off between data protection and storage overhead, enabling secure setups with as little as 50% additional storage requirements.

Furthermore, simplyblock provides a full multi-tier solution that caters to diverse storage needs in a single system. It enables you to utilize ultra-fast flash storage devices such as NVMe alongside slower SATA/SAS SSDs. At the same time, you can manage your long-term (cold) storage with traditional HDDs or QLC-based flash storage (slower but very high capacity).

Simplyblock is a robust choice if you need scalable, high-performance block storage for use cases such as databases, CDNs (Content Delivery Network), analytics solutions, and similar. Furthermore, simplyblock offers high throughput and low access latency. With its use of erasure coding, simplyblock is a great solution for companies seeking cost-effective storage while its ease of use allows organizations to adapt quickly to changing storage demands. For businesses seeking a modern, efficient, and Kubernetes-optimized block storage solution, simplyblock offers a compelling combination of features and performance.

Portworx: Kubernetes Storage and Data Management

Portworx is a cloud-native, software-defined storage platform that is highly integrated with Kubernetes. It is an enterprise-grade, closed-source solution that was acquired and is in active development by Pure Storage. Hence, its integration with the Pure Storage hardware appliances enables a performant, scalable storage option with integrated tiering capabilities.

Portworx integrated with Kubernetes through its native CSI driver and provides important CSI features such as dynamic provisioning, snapshots, clones, resizing, and persistent or ephemeral volumes. Furthermore, Portworx supports data-at-rest encryption and disaster recovery using synchronous and asynchronous cluster replication.

To enable fault tolerance and high availability, Portworx utilizes replicas, storing copies of data on different cluster nodes. This multiplies the required disk space by the replication factor. For the connection between the storage cluster and clients, Portworx provides access via iSCSI, a fairly old protocol that isn’t necessarily optimized for fast flash storage.

For connections between Pure’s FlashArray and Portworx, you can use NVMe/TCP or NVMe-RoCE (NVMe with RDMA over Converged Ethernet) — a mouthful, I know.

Encryption at rest is supported with either a unique key per volume or a cluster-wide encryption key. For storage-client communication, while iSCSI should be separated into its own VLAN, remember that iSCSI itself isn’t encrypted, meaning that encryption in transit isn’t guaranteed (if not pushed through a secured channel).

As mentioned, Portworx distinguishes itself by integrating with Pure Storage appliances. This integration enables organizations to leverage the performance and reliability of Pure’s flash storage systems. This makes Portworx a compelling choice for running critical stateful applications such as databases, message queues, and analytics platforms in Kubernetes, especially if you don’t fear operating hardware appliances. While available as a pure software-defined storage solution, Portworx excels in combination with Pure’s hardware, making it a great choice for databases, high-throughput message queues, and analytical applications on Kubernetes.

Ceph: Open-source, Distributed Storage System

Ceph is a highly scalable and distributed storage solution. Run as a company-backed open-source project, Ceph presents a unified storage platform with support for block, object, and file storage. That makes it a versatile choice for a wide range of Kubernetes applications.

Ceph’s Kubernetes integration is provided through the ceph-csi driver, which brings dynamically provisioned persistent volumes and automatic lifecycle management. CSI features supported by Ceph include snapshotting, cloning, resizing, and encryption.

The architecture of Ceph is built to be self-healing and self-managing, mainly designed to enable infinite disk space scalability. The provided access latency, while not on the top end, is good enough for many use cases. Running workloads like databases, which love high IOPS and low latency, can feel a bit laggy, though. This is one of the key differences highlighted in the Simplyblock vs Ceph comparison, which explores how these two solutions handle performance, scalability, and overall efficiency. Finally, high availability and fault tolerance are implemented through replication between Ceph cluster nodes.

From the security end, Ceph supports encryption at rest via a few different options. I’d recommend using the LUKS-based (Linux Unified Key Setup) setup as it supports all of the different Ceph storage options. The communication between cluster nodes, as well as storage and client, is not encrypted by default. If you require encryption in transit (and you should), utilize SSH and SSL termination via HAproxy or similar solutions. It’s unfortunate that a storage solution as big as Ceph has no such built-in support. The same goes for multi-tenancy, which can be achieved using RADOS namespaces but isn’t an out-of-the-box solution.

Ceph is an excellent choice as your Kubernetes storage when you are looking for an open-source solution with a proven track record of enterprise deployments, infinite storage scalability, and versatile storage types. It is not a good choice if you are looking for high-performance storage with low latency and millions of IOPS.

Moreover, due to its community-driven development and support, Ceph can be operated as a cost-effective and open-source alternative to proprietary storage solutions. Whether you’re deploying in a private data center, a public cloud, or a hybrid environment, Ceph’s adaptability is a great help for managing storage in containerized ecosystems.

Commercial support for Ceph is available from Red Hat.

Longhorn: Cloud-native Block Storage for Kubernetes

Longhorn is an open-source, cloud-native storage solution specifically designed for Kubernetes. It provides block storage that focuses on flexibility and ease of use. Therefore, Longhorn deploys straight into your Kubernetes cluster, providing worker node-local storage as persistent volumes.

As a cloud-native storage solution, Longhorn provides its own CSI driver, highly integrated with Longhorn and Kubernetes. It enables dynamic provisioning and management of persistent volumes, snapshots, clones, and backups. For the latter, people seem to have some complications with restores, so make sure to test your recovery processes.

For communication between storage and clients, Longhorn uses the iSCSI protocol. A newer version of the storage engine is in the works, which enables NVMe over TCP, however, at the time of writing, this engine isn’t yet production-ready and is not recommended for production use.

Anyhow, Longhorn provides good access latency and throughput, making it a great solution for mid-size databases and similar workloads. Encryption at rest can be set up but isn’t as simple as with some alternatives. High availability and fault tolerance is achieved by replicating data between cluster nodes. That means, as with many other solutions, the required storage is multiplied by the replication factor. However, Longhorn supports incremental backups to external storage systems like S3 for easy data protection and fast recoverability in disaster scenarios.

Longhorn is a full open-source project under the Cloud Native Computing Foundation (CNCF). It was originally developed by Rancher and is backed by SUSE. Hence, it’s commercially available with enterprise support as SUSE Storage.

Longhorn is a good choice if you want a lightweight, cloud-native, open-source solution that runs hyper-converged with your cluster workloads. It is usually used for smaller deployments or home labs — widely discussed on Reddit. Generally, it is not considered as robust as Ceph and, hence, is not recommended for mission-critical enterprise production workloads.

NFS: File Sharing Solution for Enterprises with Heterogeneous Environments

NFS (Network File System) is a well-known and widely adopted file-sharing protocol, inside and outside Kubernetes. That said, NFS has a proven track record showing its simplicity, reliability, and ability to provide shared access to persistent storage.

One of the main features of NFS is its ability to simultaneously attach volumes to many containers (and pods) with read-write access. That enables easy sharing of configuration, training data, or similar shared data sets between many instances or applications.

There are quite a few different options for integrating NFS with Kubernetes. The two main ones are the Kubernetes NFS Subdir External Provisioner. Both automatically create NFS subdirectories when new persistent volumes are requested, and the csi-driver-nfs. In addition, many storage solutions provide optimized NFS CSI drivers designed to provision shares for their respective solutions automatically. Such storage options include TrueNAS, OpenEBS, Dell EMC, and others.

High availability is one of the elements of NFS that isn’t simple, though. To make automatic failover work, additional tools like Corosync or Pacemaker need to be configured. On the client side, automount should be set up to handle automatic failover and reconnection. NFS is an old protocol from a time when those additional steps were commonplace. Today, they feel frumpy and out of place, especially compared to available alternatives.

While multi-tenancy isn’t strictly supported by NFS, using individual shares could be seen as a viable solution. However, remember that shares aren’t secured in any way. Authentication requires additional setups such as Kerberos. File access permissions shouldn’t be used as a sufficient setup for tenant isolation.

Encryption at rest with NFS comes down to the backing storage solution. NFS, as a sharing protocol, doesn’t offer anything by itself. Encryption in transit is supported, either via Kerberos or other means like TLS via stunnel. The implementation details differ per NFS provider, though. You should consult your provider’s manual.

NFS is your Kubernetes storage of choice if you need a simple, scalable, and shared file storage system that integrates seamlessly into your existing infrastructure. In the best case, you already have an NFS server set up and ready to go. Installing the CSI driver and configuring the storage class is all you need. While NFS might be a bottleneck for high-performance systems such as databases, many applications work perfectly fine. Imagine you need to scale out a WordPress-based website. There isn’t an easier way to share the same writable storage to many WP instances. That said, for organizations looking for a mature, battle-tested storage option to deliver shared storage with minimal complexity, NFS is the choice.

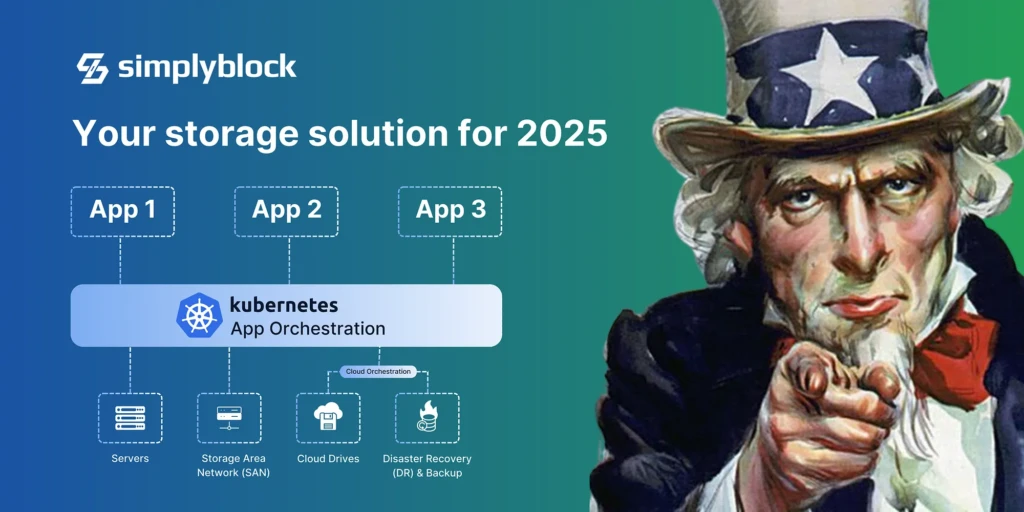

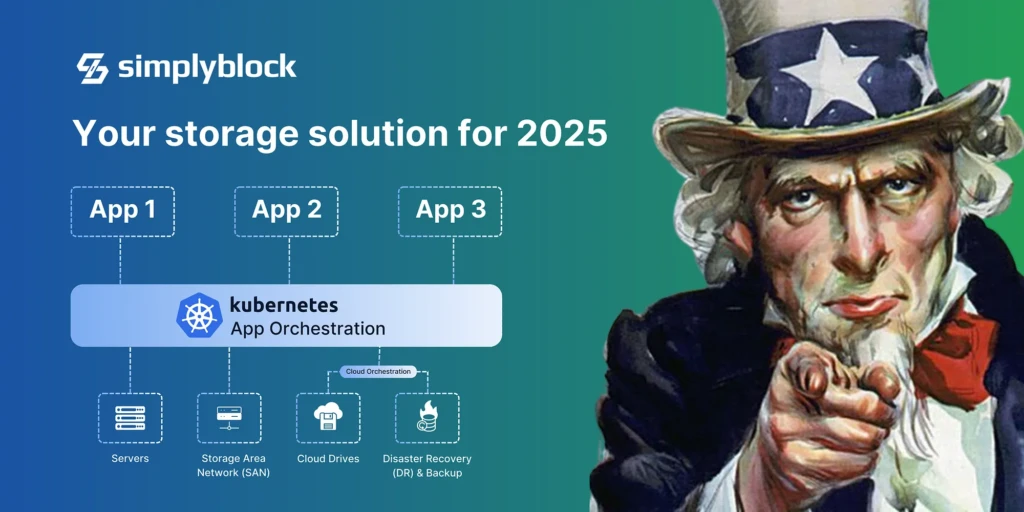

Make Your Kubernetes Storage Choice in 2025

Selecting the right storage solution for your Kubernetes persistent volume isn’t easy. It is an important choice to ensure performance, scalability, and reliability for your containerized workloads. Solutions like simplyblock™, Portworx, Ceph, Longhorn, and NFS offer a great set of features and are optimized for different use cases.

NFS is loved for its simplicity and easy multi-attach functionality. It is a great choice for all use cases needing shared write access. It’s not a great fit for high throughput and super-low access latency, though.

Ceph, on the other hand, is great if you need infinite scalability and don’t fear away from a slightly more complicated setup and operation. Ceph provides a robust choice for all use cases, as well as high-performance databases and similar IO-intensive applications.

Longhorn and Portworx are generally good choices for almost all types of applications. Both solutions provide good access latency and throughput. If you tend to buy hardware appliances, Portworx, in combination with Pure Storage, is the way to go. If you prefer pure software-defined storage and want to utilize storage available in your worker nodes, take a look at Longhorn.

Last but not least, simplyblock is your choice when running IO-hungry databases in or outside Kubernetes. Its use of the NVMe/TCP protocol makes it a perfect choice for pure container storage, as well as mixed environments with containers and virtual machines. Due to its low storage overhead for data protection, simplyblock is a great, cost-effective, and fast storage solution. And a bit of capacity always remains for all other storage needs, meaning a single solution will do it for you.

As Kubernetes evolves, leveraging the proper storage solution will significantly improve your application performance and resiliency. To ensure you make an informed decision for your short and long-term storage needs, consider factors like workload complexity, deployment scale, and data management needs.

Whether you are looking for a robust enterprise solution or a more simple and straightforward setup, these five options are all strong contenders to meet your Kubernetes storage demands in 2025.

Questions and Answers

The best Kubernetes storage in 2025 depends on your workload, but options like simplyblock stand out for their NVMe-over-TCP integration, dynamic provisioning, and Kubernetes-native architecture—ideal for high-performance, scalable, cloud-native apps.

Ceph offers flexibility, Longhorn is Kubernetes-focused, but simplyblock provides superior performance with built-in NVMe-over-TCP support, instant snapshots, and multi-tenant isolation. It’s designed for modern environments and optimized for databases on Kubernetes.

NVMe-over-TCP delivers high throughput and low latency over standard Ethernet, making it ideal for scalable containerized workloads. It’s the key to combining local SSD-like performance with remote storage flexibility in Kubernetes clusters.

Legacy SAN and NAS solutions often lack Kubernetes-native integration and dynamic provisioning support. Instead, software-defined storage platforms like Simplyblock are more agile, scalable, and cloud-friendly for containerized environments.

A robust CSI driver should support dynamic provisioning, snapshots, encryption, and replication. Simplyblock’s CSI plugin also supports volume resizing and high-performance backends, making it ideal for stateful Kubernetes workloads.