During the last decade, containerized services have emerged as a game-changer for deployment simplicity, scalability, and componentization (or modularization). Kubernetes is at the forefront of this movement, providing the key building blocks for deployment, orchestration, self-healing, and elasticity.

Over this time, Kubernetes (often abbreviated as k8s) not only outpaced many of its competitors but also became the de facto standard. Originally developed at Google and heavily inspired by Borg, Google's internal deployment and orchestration platform, it was released to the public in 2014 and quickly gained widespread adoption thanks to its ability to automate the deployment, scaling, and management of containerized applications.

Containerization

The single most important component for us as Kubernetes users is the container. A container is a lightweight, portable unit that encapsulates an application and its dependencies, ensuring consistency across different environments. In this context, dependencies include libraries, files, and anything else necessary to run the service.

Through componentization (or modularization), a larger system is broken down into many smaller containerized services that are deployed independently and commonly communicate over HTTP, message brokers, or similar technologies.

While containers bring numerous benefits, managing them at scale can be very complex. This is where Kubernetes comes in, providing a robust solution for automating the deployment, scaling, and operation of our application containers.

Kubernetes Components

Kubernetes offers a set of components as building blocks for our applications. These components aren't actual services themselves; instead, they map the different elements of our deployments onto the related Kubernetes functionality. They are exposed as resource types in the Kubernetes API, which define the available capabilities. User-deployed services can register additional resource types through CRDs (Custom Resource Definitions), extending the core platform with new building blocks.
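To make the CRD idea more concrete, here is a minimal sketch that builds a CustomResourceDefinition as a plain Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts. The group `example.com`, the `Backup` kind, and its `schedule` field are hypothetical placeholders chosen for illustration; only the surrounding structure follows the `apiextensions.k8s.io/v1` schema.

```python
import json


def make_crd(group: str, kind: str, plural: str, version: str = "v1") -> dict:
    """Build a minimal CustomResourceDefinition (apiextensions.k8s.io/v1) as a dict."""
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # A CRD's name must be <plural>.<group>.
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {"plural": plural, "singular": kind.lower(), "kind": kind},
            "versions": [
                {
                    "name": version,
                    "served": True,   # this version is exposed by the API server
                    "storage": True,  # this version is the one persisted
                    "schema": {
                        "openAPIV3Schema": {
                            "type": "object",
                            "properties": {
                                "spec": {
                                    "type": "object",
                                    # Hypothetical field, for illustration only.
                                    "properties": {"schedule": {"type": "string"}},
                                }
                            },
                        }
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_crd("example.com", "Backup", "backups"), indent=2))
```

Once such a definition is applied, the API server serves the new resource type, and objects of kind `Backup` can be created and listed like any built-in resource; a user-deployed controller would then act on them.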

The main available components are:

- Pods: The smallest deployable units in Kubernetes, encapsulating one or more containers.
- Services: Expose a network application running on one or more pods under a stable address.
- ReplicaSets: Ensure that a specified number of pod replicas are running at all times, for fault tolerance and scalability.
- Deployments: A higher-level abstraction that manages ReplicaSets, making it easier to scale and update applications.
- ConfigMaps and Secrets: Manage configuration data and sensitive information separately from application code.
- Ingress: Manages external access to services within a cluster, offering HTTP and HTTPS routing.
- Volumes: Provide containers with access to various storage technologies, both persistent and ephemeral.

For those interested in the latest advancements in Kubernetes storage, explore new storage features that enhance performance, scalability, and flexibility.
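To show how several of the components listed above fit together, the following sketch builds a Deployment (which manages a ReplicaSet and its Pods) and a Service that exposes those Pods, wrapped in a `List` object so both can be passed to `kubectl apply -f` as one JSON file. The function name `web_app`, the `app` label, and the `nginx:stable` image are illustrative assumptions, not values taken from this article.

```python
import json


def web_app(name: str, image: str, replicas: int, container_port: int) -> dict:
    """Return a kubectl-compatible List holding a Deployment and a Service."""
    labels = {"app": name}  # shared labels tie the Deployment, its Pods, and the Service together
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # the Deployment's ReplicaSet keeps this many Pods running
            "selector": {"matchLabels": labels},
            "template": {  # Pod template used for every replica
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": container_port}],
                        }
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": labels,  # traffic is routed to Pods carrying these labels
            "ports": [{"port": 80, "targetPort": container_port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    print(json.dumps(web_app("web", "nginx:stable", replicas=3, container_port=80), indent=2))
```

The key design point is the shared label set: the Deployment's selector must match its Pod template labels, and the Service finds its backend Pods through the same labels rather than through Pod names.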

Core Services

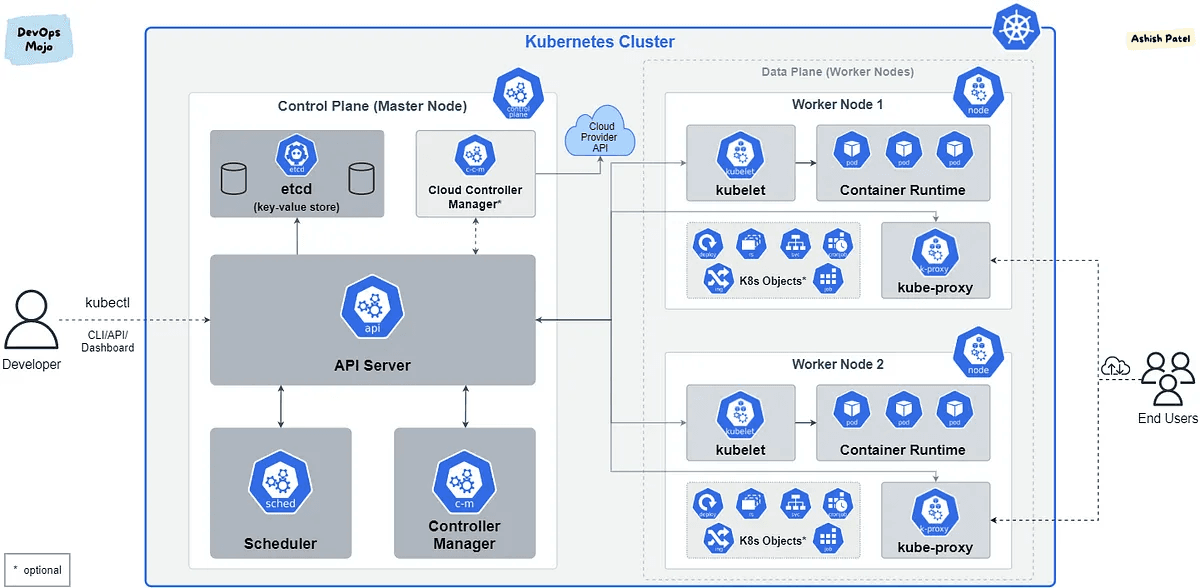

In its first couple of years, people loved to point out how easy Kubernetes is. And while it looks simple on the surface (especially when everything works), Kubernetes itself consists of many modularized and containerized components. These components provide the services the platform needs to perform its functionality.

In other words, Kubernetes isn't a single application but a set of services working together to form the platform. These services include the internal, stateful datastore (etcd), the API server itself, network proxies, the scheduler, and many more.

If you want to know more, Kavishka Fernando from WSO2 wrote a great article about the inner services that make up the Kubernetes platform.

Why Kubernetes?

While Kubernetes is complex internally, most of that complexity is hidden behind well-defined interfaces, which makes it comparatively "easy" to use. It has a steep learning curve, but the benefits outweigh the learning effort:

- Scalability: Kubernetes scales applications by adding or removing pods based on demand, and it can run on various infrastructures, including bare-metal Kubernetes for maximum performance and efficiency.
- Resilience: With automated load balancing and self-healing capabilities, Kubernetes keeps applications available and responsive.
- Portability: Applications running on Kubernetes are highly portable and can easily be moved between development, testing, and production environments.
- Declarative Configuration: Kubernetes uses declarative configuration files, enabling developers to specify the desired state of their applications while Kubernetes handles the implementation details.
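Demand-based scaling is typically handled by the HorizontalPodAutoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio lies within a tolerance (0.1 by default). The sketch below implements only that rule plus min/max clamping; the real controller also accounts for pod readiness, missing metrics, and stabilization windows, so treat it as a simplified illustration. The function name and default bounds are our own choices.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (minReplicas / maxReplicas in an HPA spec).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> scale out.
    print(desired_replicas(4, 90, 60))  # prints 6
```

For example, 4 pods at 20% CPU against the same 60% target would be scaled in to 2, while 4 pods at 63% stay at 4 because the ratio (1.05) falls inside the tolerance band.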

Kubernetes Is Here to Stay

In our fast-paced IT world, Kubernetes provides a strong foundation for large-scale deployments consisting of many user components. At the same time, it offers many of the typical building blocks needed to run those components, such as networking, container (pod) scheduling, access to configuration and secrets, and short- or long-term storage.

Engineering and operational requirements change quickly, though, as teams try to keep up with growing demand and user bases. Over the last decade, Kubernetes has evolved with the needs of its users, and it will keep evolving.

If you deploy your application into Kubernetes and need persistent storage that not only offers low, predictable latency but can also scale to high performance with many IOPS, look no further than simplyblock. Learn more about how we help you, and if you're ready to give it a spin, our simple pricing makes it easy to get started right away.

Questions and Answers

Kubernetes is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. It’s crucial for cloud-native infrastructure because it enables efficient resource utilization, self-healing, and seamless scalability. Learn how it supports stateful workloads with persistent storage.

The control plane in Kubernetes manages the cluster state and schedules workloads. It includes components like the API server, scheduler, controller manager, and etcd. Together, they maintain the desired state of applications, ensuring resilience and consistency across nodes.
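The desired-state principle behind the control plane can be illustrated with a toy reconciliation loop in the spirit of the ReplicaSet controller: compare the desired replica count with the pods that actually exist, then create or delete pods until they match. This is a hypothetical in-memory simulation, not the actual controller code; the class name, the pod naming scheme, and the "delete the newest pod first" policy are simplifications of our own.

```python
import itertools


class ToyReplicaSetController:
    """In-memory sketch of a reconcile loop; no real Kubernetes API is involved."""

    def __init__(self, name: str, replicas: int):
        self.name = name
        self.replicas = replicas         # desired state (like spec.replicas)
        self.pods: list[str] = []        # observed state (pods that currently exist)
        self._counter = itertools.count()

    def reconcile(self) -> list[tuple[str, str]]:
        """Drive the observed state toward the desired state; return the actions taken."""
        actions = []
        while len(self.pods) < self.replicas:
            pod = f"{self.name}-{next(self._counter)}"
            self.pods.append(pod)
            actions.append(("create", pod))
        while len(self.pods) > self.replicas:
            pod = self.pods.pop()        # simplification: remove the newest pod
            actions.append(("delete", pod))
        return actions


if __name__ == "__main__":
    rs = ToyReplicaSetController("web", replicas=3)
    print(rs.reconcile())    # creates web-0, web-1, web-2
    rs.pods.remove("web-1")  # simulate a failed pod
    print(rs.reconcile())    # self-healing: creates web-3
    rs.replicas = 2          # declarative scale-down
    print(rs.reconcile())    # deletes web-3
```

The loop never executes a sequence of imperative commands from the user; it only reacts to the difference between desired and observed state, which is why the same mechanism covers both self-healing and scaling.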

Kubernetes nodes are machines—virtual or physical—that run containerized applications. Each node includes the kubelet, kube-proxy, and a container runtime. Nodes execute the workloads scheduled by the control plane and communicate with the Kubernetes API.

Storage in Kubernetes is complex due to the dynamic nature of containers. Persistent storage needs to integrate with container lifecycles. Solutions like the Container Storage Interface (CSI) standardize how storage systems expose volumes to Kubernetes, but implementation still varies across environments.
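From an application's point of view, CSI-backed storage is usually consumed through a PersistentVolumeClaim: the pod references the claim, and the CSI driver behind the claim's storage class provisions the actual volume. The sketch below builds such a claim and a pod that mounts it, again as a `List` suitable for `kubectl apply -f`. The storage class name `fast-nvme`, the claim size, and the `busybox:1.36` image are hypothetical; the storage class would need to exist in the cluster and point at an installed CSI driver.

```python
import json


def pvc_and_pod(claim: str, size: str, storage_class: str) -> dict:
    """Return a kubectl-compatible List with a PersistentVolumeClaim and a Pod that mounts it."""
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": claim},
        "spec": {
            "accessModes": ["ReadWriteOnce"],   # mountable read-write by a single node
            "storageClassName": storage_class,  # selects the provisioner (e.g. a CSI driver)
            "resources": {"requests": {"storage": size}},
        },
    }
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": f"{claim}-consumer"},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c", "sleep 3600"],
                    "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                }
            ],
            # The pod references the claim, not the underlying volume.
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim}}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [pvc, pod]}


if __name__ == "__main__":
    print(json.dumps(pvc_and_pod("db-data", "10Gi", "fast-nvme"), indent=2))
```

Because the pod only names the claim, the same manifest can run against different storage backends by changing the storage class, which is the decoupling CSI is meant to provide.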

Choosing the right storage backend depends on performance, scalability, and reliability needs. Many modern workloads benefit from NVMe-based storage, including NVMe over TCP, thanks to its high IOPS and low latency, which makes it a strong fit for Kubernetes environments.