NVMe, or Non-Volatile Memory Express, is a modern access and storage protocol for flash-based solid-state storage. Designed for low overhead, latency, and response times, it aims for the highest achievable throughput. With NVMe over TCP, NVMe has its own successor to the familiar iSCSI.

While NVMe drives are commonly found in home computers and laptops (in the M.2 form factor), the protocol is designed from the ground up for all types of commodity and enterprise workloads. It is built for fast load times and response times, even in demanding application scenarios.

The main intention in developing the NVMe storage protocol was to transfer data through the PCIe (PCI Express) bus. Since the introduction of the low-overhead protocol, more use cases have been found through NVMe specification extensions managed by the NVM Express group. Those extensions include additional transport layers, such as Fibre Channel, InfiniBand, and TCP (collectively known as NVMe-oF or NVMe over Fabrics).

How does NVMe Work?

Traditionally, computers used SATA or SAS (and before that, ATA, IDE, SCSI, …) as their main protocols for data transfers from the disk to the rest of the system. Those protocols were all developed when spinning disks were the prevalent type of high-capacity storage media.

NVMe, on the other hand, was developed as a standard protocol to communicate with modern solid-state drives (SSDs). Unlike traditional protocols, NVMe fully takes advantage of SSDs’ capabilities. It also supports much lower latency because there are no read-write heads to reposition and no spindles to rotate.

The main reason for developing the NVMe protocol was that SSDs were starting to experience throughput limitations due to the traditional protocols SAS and SATA.

NVMe communicates through the high-speed Peripheral Component Interconnect Express bus (better known as PCIe). The logic for NVMe resides inside the controller chip on the storage adapter board, which is physically located inside the NVMe-capable device. This board is often co-located with controllers for other features, such as wear leveling. When reading or writing data, the NVMe controller talks directly to the CPU through the PCIe bus.

The NVMe standard defines registers (basically special memory locations) to control the protocol, a command set of possible operations to be executed, and additional features to improve performance for specific operations.
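
To make the idea of a command set more concrete, here is a minimal Python sketch that packs a Read command into the 64-byte layout of a submission queue entry. The field layout is simplified, and the names `SQE_FORMAT` and `build_read_command` are illustrative assumptions, not part of any driver or library.

```python
import struct

# Simplified layout of a 64-byte NVMe submission queue entry (little-endian):
# opcode (1), flags (1), command id (2), namespace id (4), reserved (8),
# metadata pointer (8), PRP1 (8), PRP2 (8), command dwords 10-15 (6 x 4).
SQE_FORMAT = "<BBHIQQQQ6I"

OPCODE_READ = 0x02  # NVM command set: Read


def build_read_command(command_id, namespace_id, start_lba, block_count, buffer_addr):
    """Pack a Read command for `block_count` logical blocks starting at `start_lba`."""
    cdw10 = start_lba & 0xFFFFFFFF          # starting LBA, lower 32 bits
    cdw11 = (start_lba >> 32) & 0xFFFFFFFF  # starting LBA, upper 32 bits
    cdw12 = (block_count - 1) & 0xFFFF      # the block count field is zero-based
    return struct.pack(
        SQE_FORMAT,
        OPCODE_READ, 0, command_id, namespace_id,
        0,            # reserved
        0,            # metadata pointer (unused in this sketch)
        buffer_addr,  # PRP1: where the controller should place the data
        0,            # PRP2 (unused for a single-page transfer)
        cdw10, cdw11, cdw12, 0, 0, 0,
    )


sqe = build_read_command(command_id=1, namespace_id=1, start_lba=2048,
                         block_count=8, buffer_addr=0x1000)
print(len(sqe))  # 64
```

A real driver would place such an entry into a submission queue in memory and then notify the controller through one of its registers. The sketch only shows how compact and fixed-size a single command is.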

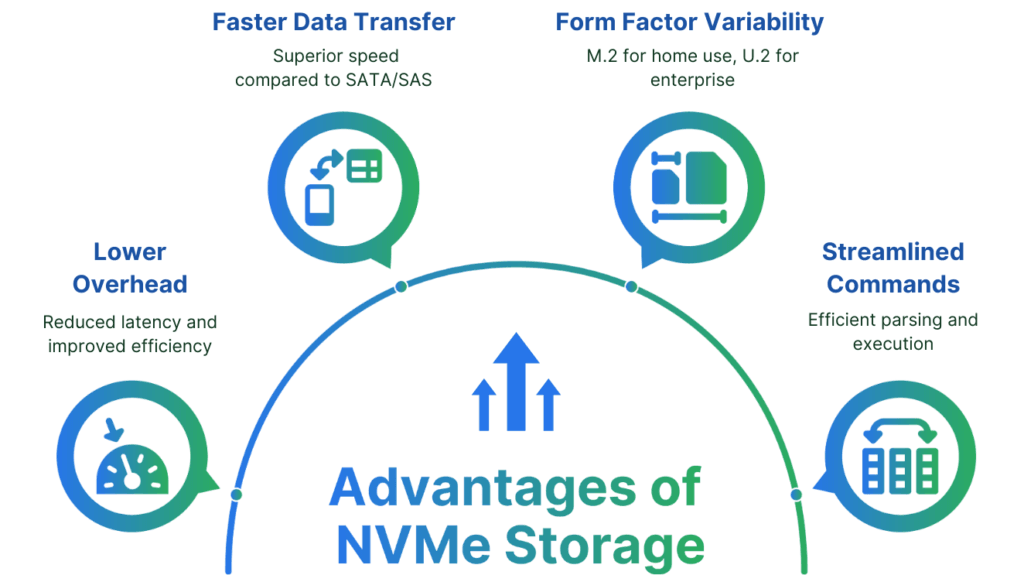

What are the Benefits of NVMe Storage?

Compared to traditional storage protocols, NVMe has much lower overhead and is better optimized for high-speed and low-latency data access.

Additionally, the PCI Express bus can transfer data faster than SATA or SAS links. That means that NVMe-based SSDs can provide a latency of a few microseconds, compared to the 40-100 µs of SATA-based ones.
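
Why per-operation latency matters so much can be shown with a little arithmetic. The following sketch, in Python, computes an upper bound on I/O operations per second from a given latency. The helper `max_iops` and the latency values are illustrative assumptions, not measurements of any specific device.

```python
def max_iops(latency_us, queue_depth=1):
    """Upper bound on I/O operations per second when each operation takes
    `latency_us` microseconds and `queue_depth` operations are in flight."""
    return queue_depth * 1_000_000 / latency_us


print(max_iops(100))  # 10000.0
print(max_iops(10))   # 100000.0
```

With a single outstanding operation, cutting latency by a factor of ten raises the achievable IOPS ceiling by the same factor.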

Furthermore, NVMe storage comes in many different packages, depending on the use case. Many people know the M.2 form factor from home use; however, it is limited in bandwidth because consumer-grade CPUs offer far fewer PCIe lanes. Enterprise NVMe form factors, such as U.2, provide more and higher-capacity uplinks. These enterprise types are specifically designed to sustain high throughput for ambitious data center workloads, such as high-load databases or ML / AI applications.

Last but not least, NVMe commands can be streamlined, queued, and multipathed for more efficient parsing and execution. Due to the non-rotational nature of solid-state drives, multiple operations can be executed in parallel. This makes NVMe a perfect candidate for tunneling the protocol over high-speed communication links.
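
The queueing model can be sketched as a toy simulation in Python. NVMe pairs each submission queue with a completion queue, and drivers commonly create one pair per CPU core. The `QueuePair` class below is a hypothetical illustration of that idea, not real driver code.

```python
from collections import deque


class QueuePair:
    """Toy model of one submission/completion queue pair (not real driver code)."""

    def __init__(self, depth):
        self.depth = depth
        self.submission = deque()
        self.completion = deque()

    def submit(self, command_id):
        if len(self.submission) >= self.depth:
            raise RuntimeError("submission queue full")
        self.submission.append(command_id)

    def process(self):
        """Stand-in for the controller: drain all queued commands."""
        while self.submission:
            self.completion.append((self.submission.popleft(), "ok"))


# One hypothetical queue pair per CPU core, so cores never contend for a queue.
pairs = {core: QueuePair(depth=4) for core in range(2)}
pairs[0].submit(10)
pairs[0].submit(11)
pairs[1].submit(20)

for pair in pairs.values():
    pair.process()

completed = {core: list(pair.completion) for core, pair in pairs.items()}
print(completed)
```

Because every core owns its own pair, commands can be submitted without locking against other cores, which is one reason the protocol scales well with parallel workloads.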

What is NVMe over Fabrics (NVMe-oF)?

NVMe over Fabrics is a tunneling mechanism for access to remote NVMe devices. It improves the performance of remote access to solid-state drives compared to traditional tunneling protocols, such as iSCSI.

NVMe over Fabrics is directly supported by the NVMe driver stacks of common operating systems, such as Linux and Windows (Server), and doesn’t require additional software on the client side.

At the time of writing, the NVM Express group has standardized the tunneling of NVMe commands through the NVMe-friendly protocols Fibre Channel, InfiniBand, and Ethernet (or, more precisely, TCP).

NVMe over Fibre Channel (NVMe/FC)

NVMe over Fibre Channel is a high-speed transport that connects NVMe storage solutions to client devices. Fibre Channel, initially designed to transport SCSI commands, originally needed to translate NVMe commands into SCSI commands and back to communicate with newer solid-state hardware. To mitigate that overhead, the Fibre Channel protocol was enhanced to natively support the transport of NVMe commands. Today, it supports native, in-order transfers between NVMe storage devices across the network.

Because Fibre Channel is its own networking stack, cloud providers (at least none to my knowledge) don’t offer support for NVMe/FC.

NVMe over TCP (NVMe/TCP)

NVMe over TCP provides an alternative way of transferring NVMe communication through a network. In the case of NVMe/TCP, the underlying network layer is the TCP/IP protocol, hence an Ethernet-based network. That increases the availability and commodity of such a transport layer beyond separate and expensive enterprise networks running Fibre Channel.

NVMe/TCP is rising to become the next protocol for mainstream enterprise storage, offering the best combination of performance, ease of deployment, and cost efficiency.

Due to its reliance on TCP/IP, NVMe/TCP can be used without additional modifications on all standard Ethernet network gear, such as NICs, switches, and copper or fiber transports. It also works across virtual private networks, making it extremely interesting in cloud, private cloud, and on-premises environments, especially in public clouds with limited network connectivity options.
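
On Linux, attaching a remote NVMe/TCP volume is typically done with the `nvme-cli` tool. The following Python sketch only builds the command line and does not execute it. The helper name, the IP address, and the NQN are placeholder assumptions. Port 4420 is the port registered for NVMe over Fabrics, but a given target may listen elsewhere.

```python
import shlex


def nvme_tcp_connect_command(address, subsystem_nqn, port=4420):
    """Build (but do not run) an nvme-cli command to attach a remote NVMe/TCP subsystem."""
    return [
        "nvme", "connect",
        "--transport", "tcp",
        "--traddr", address,
        "--trsvcid", str(port),
        "--nqn", subsystem_nqn,
    ]


# Placeholder values for illustration only.
cmd = nvme_tcp_connect_command("192.0.2.10", "nqn.2024-01.example:volume1")
print(shlex.join(cmd))
```

Actually running the resulting command would require root privileges, a loaded NVMe/TCP kernel module, and a reachable target. Once connected, the remote volume shows up as a regular NVMe block device.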

NVMe over RDMA (NVMe/RDMA)

A special version of NVMe over Fabrics is NVMe over RDMA (or NVMe/RDMA). It implements a direct communication channel between a storage controller and a remote memory region (RDMA = Remote Direct Memory Access). This lowers the CPU overhead for remote access to storage (and other peripheral devices). To achieve that, NVMe/RDMA bypasses the kernel stack, which avoids copying memory between the driver stack, the kernel stack, and the application memory.

NVMe over RDMA has two sub-protocols: NVMe over InfiniBand and NVMe over RoCE (Remote Direct Memory Access over Converged Ethernet). Some cloud providers offer NVMe over RDMA access through their virtual networks.

How does NVMe/TCP Compare to iSCSI?

NVMe over TCP provides performance and latency benefits over the older iSCSI protocol. The improvements include about 25% lower protocol overhead, meaning more actual data can be transferred with every TCP/IP packet, increasing the protocol’s throughput.

Furthermore, NVMe/TCP enables native transfer of the NVMe protocol, removing multiple translation layers between the older SCSI protocol (which is used in iSCSI, hence the name) and NVMe.

That said, the difference is measurable. Blockbridge Networks, a provider of all-flash storage hardware, benchmarked both protocols and found an access latency improvement of up to 20% and an IOPS improvement of up to 35% when using NVMe/TCP instead of iSCSI to access remote storage.

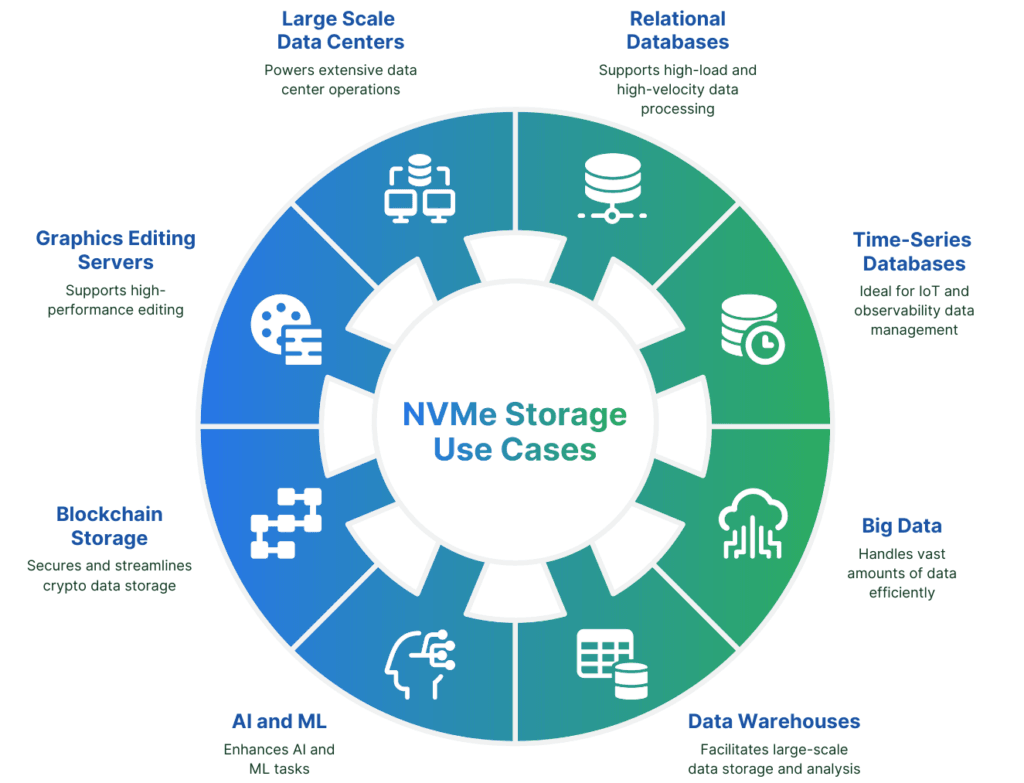

Use Cases for NVMe Storage

NVMe storage’s benefits and ability to be tunneled through different types of networks (including virtual private networks in cloud environments through NVMe/TCP) open up a wide range of high-performance, latency-sensitive, or IOPS-hungry use cases.

That includes:

- Relational databases with high load or high-velocity data

- Time-series databases for IoT or Observability data

- Big Data

- Data Warehouses

- Analytical databases

- Artificial Intelligence (AI) and Machine Learning (ML)

- Blockchain storage and other Crypto use cases

- Large-scale data center storage solutions

- Graphics editing storage servers

The Future is NVMe Storage

No matter how we look at it, the amount of data we need to transfer (quickly) from and to storage devices won’t shrink. NVMe is the current gold standard for high-performance and low-latency storage. Making NVMe available throughout a network and accessing the data remotely is becoming increasingly popular over the still prevalent iSCSI protocol. The benefits are immediate whenever NVMe-oF is deployed.

The storage solution by simplyblock is designed around the idea of NVMe being the better way to access your data. Built from the ground up to support NVMe throughout the stack, it combines NVMe solid-state drives into a massive storage pool. It creates logical volumes, with data spread across all connected storage devices and simplyblock cluster nodes. Simplyblock provides these logical volumes as NVMe over TCP devices, which are directly accessible from Linux and Windows. Additional features such as copy-on-write clones, thin provisioning, compression, encryption, and more are included.

If you want to learn more about simplyblock, read our feature deep dive. If you want to test it out, get started right away.

Questions and Answers

What is NVMe?

NVMe (Non-Volatile Memory Express) is a storage protocol designed specifically for high-speed SSDs, typically connected through the PCIe interface. Unlike older protocols like SATA or SCSI, NVMe offers significantly lower latency and higher IOPS, making it ideal for performance-critical workloads like databases and analytics.

How does NVMe differ from traditional SSD interfaces?

While traditional SSDs use SATA or SAS interfaces, NVMe SSDs connect directly via PCIe, enabling faster data transfer. NVMe provides parallelism, reduced overhead, and direct CPU communication, offering up to 6x the performance of SATA SSDs in real-world use cases.

What is NVMe over TCP?

NVMe over TCP extends NVMe’s performance benefits over standard Ethernet networks using the TCP/IP protocol. It allows remote access to NVMe storage with near-local performance, making it ideal for cloud-native environments.

Why does NVMe matter for Kubernetes workloads?

Stateful Kubernetes workloads like databases, AI/ML models, and analytics pipelines require fast, consistent I/O. NVMe storage reduces bottlenecks and improves responsiveness, especially when combined with software-defined storage platforms like simplyblock.

Can NVMe reduce cloud storage costs?

Yes. With higher performance per dollar and lower latency, NVMe enables better hardware utilization and faster job completion. Solutions like simplyblock for AWS use NVMe to cut IOPS costs and optimize cloud spend.